Senior Design Team 40 • sdmay25-40 SD Site

Project Overview

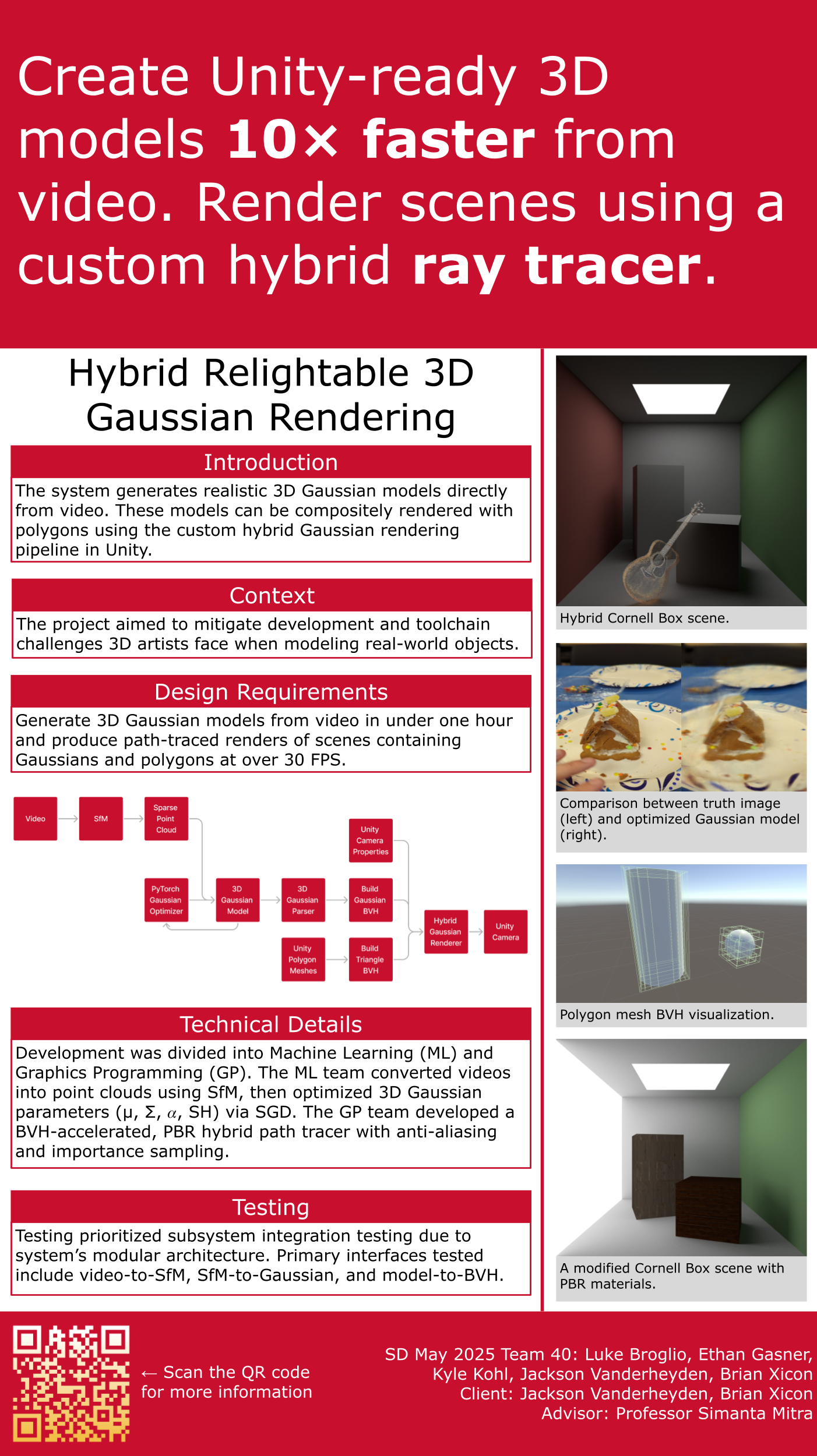

The process begins by capturing a video and extracting its frames into a sequence of images, which are then processed using Structure from Motion (SfM) techniques. This step generates point clouds, collections of 3D points representing the scene's structure. From these point clouds, the system creates Gaussian splats, a simplified representation of the scene's geometry in which each point in the cloud becomes a Gaussian distribution in 3D space.
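The step from point cloud to Gaussians can be sketched as follows. This is a minimal illustration, not the team's implementation: the `Gaussian` class, the `init_gaussians` function, the isotropic scale taken from the mean distance to the nearest neighbours, and the starting opacity of 0.1 are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Gaussian:
    mean: tuple      # center, copied from the SfM point
    scale: float     # isotropic standard deviation (assumed for this sketch)
    opacity: float   # starting opacity (assumed constant)


def init_gaussians(points, k=3, opacity=0.1):
    """Create one isotropic Gaussian per point.

    The scale is the mean distance to the k nearest neighbours, so dense
    regions start with small splats and sparse regions with large ones.
    Brute force, O(n^2): fine for a sketch, too slow for real clouds.
    """
    gaussians = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        nearest = dists[:k]
        scale = sum(nearest) / len(nearest) if nearest else 1.0
        gaussians.append(Gaussian(mean=tuple(p), scale=scale, opacity=opacity))
    return gaussians


cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 2.0)]
splats = init_gaussians(cloud)
```

The isolated point at `(0.0, 0.0, 2.0)` ends up with the largest scale, which is the intended behavior: a sparse region is covered by a wider splat until optimization refines it.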

Next, the generated Gaussian points are fed into a PyTorch-based optimizer. This optimizer uses machine learning techniques to refine the positions and properties of the points. It also produces additional per-point attributes that are needed for accurate, detailed rendering. This optimization ensures the final 3D scene is visually realistic and computationally efficient.
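The idea of refining Gaussian parameters against a target can be shown with a deliberately small stand-in. The sketch below is not the PyTorch optimizer: it uses plain Python with hand-derived gradients, a single 1D Gaussian, and a synthetic target in place of captured images. The function names `render` and `fit_gaussian`, the learning rate, and the step count are assumptions chosen for the example.

```python
import math


def render(xs, amplitude, mean, sigma=1.0):
    """Evaluate a 1D Gaussian at each sample position."""
    return [amplitude * math.exp(-((x - mean) ** 2) / (2 * sigma**2)) for x in xs]


def fit_gaussian(xs, target, amplitude, mean, sigma=1.0, lr=0.5, steps=500):
    """Refine amplitude and mean by gradient descent on mean squared error."""
    n = len(xs)
    for _ in range(steps):
        grad_a = 0.0
        grad_m = 0.0
        for x, t in zip(xs, target):
            e = math.exp(-((x - mean) ** 2) / (2 * sigma**2))
            residual = amplitude * e - t
            # Partial derivatives of the squared error for this sample.
            grad_a += 2 * residual * e / n
            grad_m += 2 * residual * amplitude * e * (x - mean) / sigma**2 / n
        amplitude -= lr * grad_a
        mean -= lr * grad_m
    return amplitude, mean


xs = [i * 0.25 for i in range(-12, 21)]         # sample positions from -3 to 5
target = render(xs, amplitude=0.8, mean=1.0)    # stand-in for ground-truth pixels
amplitude, mean = fit_gaussian(xs, target, amplitude=0.5, mean=0.5)
```

In a PyTorch setting the gradients would normally come from automatic differentiation rather than being written by hand, and the loss would compare rendered images with the input frames; the loop structure is the part this sketch is meant to convey.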

The output from the optimizer and its model is then passed through a parser that prepares the data for rendering. This includes creating a Bounding Volume Hierarchy (BVH), a hierarchical structure that accelerates the ray tracing process by organizing the 3D objects in the scene for more efficient intersection tests. For scenes requiring more detailed geometry, users can integrate polygonal meshes (triangle meshes), which are also processed into their own BVH structures, enabling them to coexist and be rendered alongside the Gaussian splats.
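A BVH build of the kind described above can be sketched as a recursive median split over axis-aligned bounding boxes. The `AABB` and `BVHNode` classes, the `build_bvh` function, and the choice of a median split along the longest axis are assumptions for illustration; the source does not state which split strategy the parser uses. The input list is assumed to be non-empty.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class AABB:
    lo: Vec3
    hi: Vec3

    def union(self, other: "AABB") -> "AABB":
        return AABB(
            tuple(min(a, b) for a, b in zip(self.lo, other.lo)),
            tuple(max(a, b) for a, b in zip(self.hi, other.hi)),
        )

    def centroid(self, axis: int) -> float:
        return 0.5 * (self.lo[axis] + self.hi[axis])


@dataclass
class BVHNode:
    bounds: AABB
    left: Optional["BVHNode"] = None
    right: Optional["BVHNode"] = None
    primitive: Optional[int] = None   # index into the input list, leaves only


def build_bvh(boxes: List[AABB], indices: Optional[List[int]] = None) -> BVHNode:
    """Recursively split primitives at the median along the longest axis."""
    if indices is None:
        indices = list(range(len(boxes)))
    bounds = boxes[indices[0]]
    for i in indices[1:]:
        bounds = bounds.union(boxes[i])
    if len(indices) == 1:
        return BVHNode(bounds, primitive=indices[0])
    extent = [bounds.hi[a] - bounds.lo[a] for a in range(3)]
    axis = extent.index(max(extent))
    ordered = sorted(indices, key=lambda i: boxes[i].centroid(axis))
    mid = len(ordered) // 2
    return BVHNode(bounds, build_bvh(boxes, ordered[:mid]), build_bvh(boxes, ordered[mid:]))


def count_leaves(node: BVHNode) -> int:
    if node.primitive is not None:
        return 1
    return count_leaves(node.left) + count_leaves(node.right)


# Four unit boxes spread along x. A Gaussian could be bounded the same way,
# for example by a box spanning a few standard deviations (an assumption).
boxes = [AABB((float(x), 0.0, 0.0), (x + 1.0, 1.0, 1.0)) for x in (0, 5, 2, 9)]
root = build_bvh(boxes)
```

Because the builder only needs a bounding box per primitive, the same routine can serve triangles and Gaussians alike, which is one plausible way for the two kinds of geometry to share a traversal scheme.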

The core of the system’s rendering capability lies in its ray tracer. This component takes the BVH data (whether Gaussian or triangle mesh-based) and combines it with the Unity camera to calculate how light interacts within the scene, ultimately producing realistic images. At path intersection points, each hit is inserted into a sorted list of intersections. After insertion, we loop through the sorted hit list, keeping track of the path's transmittance as it passes through translucent materials. Once the path’s transmittance falls to a minimum threshold, we cull the rest of the list and treat that hit as the point of intersection. The ray tracing process accounts for various optical effects, such as shadows, reflections, and global illumination, ensuring the final output is both physically accurate and visually compelling.
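The sorted-hit loop described above can be sketched in a few lines. The `Hit` class, the `shade_path` function, the threshold of 0.01, and the front-to-back color blending are assumptions for illustration; the source specifies only the sorted insertion, the transmittance tracking, and the cull once a minimum is reached.

```python
import bisect
from dataclasses import dataclass, field


@dataclass(order=True)
class Hit:
    distance: float                       # sort key: distance along the path
    alpha: float = field(compare=False)   # opacity contributed at this hit
    color: tuple = field(compare=False)   # RGB value of the hit primitive


def shade_path(hits, min_transmittance=0.01):
    """Blend hits front to back until the path is nearly opaque.

    Returns the accumulated color and the distance treated as the point
    of intersection (None if the threshold was never reached).
    """
    ordered = []
    for hit in hits:
        bisect.insort(ordered, hit)       # keep the list sorted on insertion

    transmittance = 1.0
    color = [0.0, 0.0, 0.0]
    for hit in ordered:
        weight = transmittance * hit.alpha
        for c in range(3):
            color[c] += weight * hit.color[c]
        transmittance *= 1.0 - hit.alpha
        if transmittance <= min_transmittance:
            return tuple(color), hit.distance   # cull everything behind this hit
    return tuple(color), None


hits = [
    Hit(3.0, 1.0, (0.0, 0.0, 1.0)),   # opaque blue surface
    Hit(1.0, 0.5, (1.0, 0.0, 0.0)),   # translucent red
    Hit(2.0, 0.5, (0.0, 1.0, 0.0)),   # translucent green
    Hit(4.0, 1.0, (1.0, 1.0, 1.0)),   # white surface hidden behind the blue one
]
color, depth = shade_path(hits)
```

Here the path stops at distance 3.0: the two translucent hits leave a transmittance of 0.25, the opaque blue hit drops it to zero, and the white surface behind it never contributes.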

Problem Statement

The technique of generating images of a scene from any perspective using only a video or a series of photographs is known as novel view synthesis. Previously, this was done using Neural Radiance Fields (NeRFs). This method has costly training and rendering times, can produce subpar results, and cannot be combined with traditional polygon meshes.

Our solution is to create a novel view synthesis system using a new technique called 3D Gaussian Splatting (3DGS). This solution is significantly faster and creates higher-quality renders than NeRFs, but one lingering issue remains. Standard 3DGS models are not compatible with industry-standard polygon meshes.

To solve these issues, we built off the work of Relightable Gaussians and created a ray-traced rendering system that seamlessly integrates triangle and Gaussian models. This system will allow users to change the scene's lighting in real-time, take advantage of global illumination features such as reflections and shadows, and add traditional polygon meshes into the scene.

This system will be beneficial in a range of settings: in game development and 3D art; in sales, where it lets customers see an accurate, high-quality depiction of a product; and as an improvement on current NeRF or photogrammetry systems.

Executive Summary

Our plan was to split the project into two parts: the machine learning part and the graphics path tracer part. The machine learning part was broken down into Structure from Motion and the Optimizer. The Structure from Motion code took a video of an object and transformed it into Gaussian points in the form of a .ply file, which was then passed to the Optimizer code. The Optimizer code took the cloud of Gaussian points and, using a PyTorch ML model, extracted data from the points. This data was then passed to the Unity path tracer code in the form of a .ply file.

With this information, the Unity side was able to create a 3D Gaussian object to ray trace. Our design meets the need for high-quality 3D Gaussian models. To run our program, a user needs Unity installed and an NVIDIA graphics card to run the ML code. These two requirements mean the program does not reach everyone, but we believe that anyone who wants to use it most likely meets them already.
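The .ply hand-off between stages can be illustrated with a minimal ASCII PLY round trip. This sketch stores only `x`, `y`, `z` per vertex; the property list the team's files actually carry is not given here, and the function names `write_ascii_ply` and `read_ascii_ply` are hypothetical.

```python
def write_ascii_ply(points):
    """Serialize xyz points as an ASCII PLY document."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    body = [f"{x} {y} {z}" for x, y, z in points]
    return "\n".join(header + body) + "\n"


def read_ascii_ply(text):
    """Parse the vertex positions back out of an ASCII PLY document."""
    lines = text.splitlines()
    count = next(int(l.split()[2]) for l in lines if l.startswith("element vertex"))
    start = lines.index("end_header") + 1
    return [tuple(float(v) for v in l.split()) for l in lines[start:start + count]]


points = [(0.0, 0.0, 0.0), (1.0, 0.5, -2.0)]
document = write_ascii_ply(points)
restored = read_ascii_ply(document)
```

A file exchanged between an optimizer and a renderer would likely declare further per-vertex properties in the header; the header-then-rows layout stays the same.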

The next steps for our project would be to improve performance so that it runs faster and produces higher-quality 3D models, and to add more features to the scope.

Team Members

Jackson Vanderheyden

Graphics Scope Manager

The skills, expertise, and unique perspectives Jackson Vanderheyden brings to the team are: computer graphics industry experience, multiple Unity projects, & previous experience implementing ray tracing.

Brian Xicon

Machine Learning Scope Manager

The skills, expertise, and unique perspectives Brian Xicon brings to the team are: C, C++, HTML, CSS, JS, Python, PyTorch experience, Machine Learning experience, and Computer Vision experience.

Luke Broglio

Schedule Manager

The skills, expertise, and unique perspectives Luke Broglio brings to the team are: experience with C, C++, Python, graphics programming, Unity, HTML/CSS, JavaScript, and writing a ray tracer, as well as experience working in agile/scrum development environments.

Ethan Gasner

Documentation Manager

The skills, expertise, and unique perspectives Ethan Gasner brings to the team are: C, C++, Python, AI experience, machine learning experience, Unity experience, a unique cybersecurity perspective, JavaScript (plus HTML/CSS), and a cooperative, easygoing attitude.

Kyle Kohl

Communication Manager

The skills, expertise, and unique perspectives Kyle Kohl brings to the team are: C, C++, Java, Python, and a little bit of HTML and CSS. He has the definite advantage of being an extrovert who loves to encourage others, and he has extensive experience in the communication role.

Documents

Design Document

Final Presentation

Context and Techniques Document

Demo

Design Process Slides

Product Research

Problem and Users

User needs and Requirements

Project Planning

Detailed Design

Design Check-In

Prototyping

Ethics and Professional Responsibility

Reports

Report 1 || (09/13-09/19)

Report 2 || (09/20-09/26)

Report 3 || (09/27-10/03)

Report 4 || (10/04-10/10)

Report 5 || (10/11-10/17)

Report 6 || (10/18-10/24)

Report 7 || (10/25-10/31)

Report 8 || (11/01-11/07)

Report 9 || (11/08-11/14)

Report 10 || (11/15-11/22)

Report 11 || (1/16-1/30)

Report 12 || (1/31-2/13)

Report 13 || (2/14-2/27)

Report 14 || (2/28-3/13)

Report 15 || (3/14-4/3)

Report 16 || (4/4-4/17)

Spring Sprint Reports

Sprint Report 1

Sprint Report 2

Sprint Report 3

Sprint Report 4

Sprint Report 5

Sprint Report 6